Abstract

We address the challenge of task-oriented navigation in unstructured and unknown environments, where robots must incrementally build and reason over rich, metric-semantic maps in real time. Because tasks may require clarification or re-specification, the map must carry enough information to generalize across a wide range of tasks. To execute tasks specified in natural language, we propose a hierarchical representation built on language-embedded Gaussian splatting. It supports both sparse semantic planning, which lends itself to online operation, and a dense geometric representation for collision-free navigation. We validate our method through real-world robot experiments in both cluttered indoor and kilometer-scale outdoor environments, achieving a competitive ratio of about 60% against privileged baselines.

Video

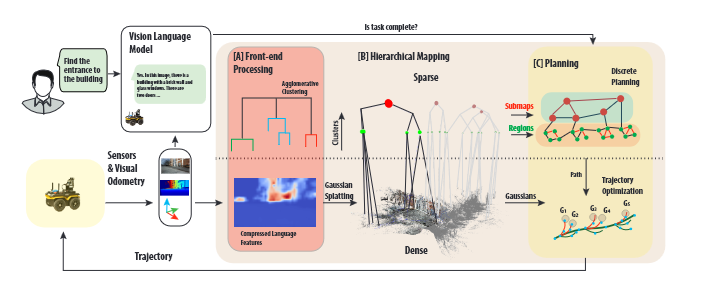

System Overview

Our framework consists of three components. The front-end processing [A] extracts and compresses dense pixel-level language features from the image. It also clusters features in the map based on geometry and semantics. The hierarchical mapper [B] runs bottom-up, ingesting the RGB and depth images and the odometric path from the robot to build a map. The top level of the map contains the submaps, the middle level the regions, and the bottom level the objects. The local map comprises the loaded submaps; the remaining submaps are unloaded to save memory (shown here in gray). The planning module [C] consists of a discrete planner that operates on the sparse map and generates a reference path, while the dense Gaussians in the local map are used to find the trajectory that the robot executes.
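The three-level map described above (submaps, then regions, then objects) and the loaded/unloaded distinction can be illustrated with a minimal Python sketch. This is not the authors' implementation: the class names, the `load_radius` parameter, and the rule of loading submaps by distance to the robot are all assumptions made for illustration, and the `loaded` flag merely stands in for keeping a submap's dense Gaussians in memory.

```python
import math
from dataclasses import dataclass, field


@dataclass
class MapObject:
    """Bottom level: a labeled object with a 2D position (hypothetical fields)."""
    label: str
    position: tuple[float, float]


@dataclass
class Region:
    """Middle level: a named group of objects."""
    name: str
    objects: list[MapObject] = field(default_factory=list)


@dataclass
class Submap:
    """Top level: a submap anchored at a pose along the robot's path."""
    submap_id: int
    anchor: tuple[float, float]
    regions: list[Region] = field(default_factory=list)
    loaded: bool = False  # placeholder for "dense Gaussians are in memory"


class HierarchicalMap:
    def __init__(self, load_radius: float):
        # Assumed loading rule: keep submaps within this distance of the robot.
        self.load_radius = load_radius
        self.submaps: dict[int, Submap] = {}

    def add_submap(self, submap: Submap) -> None:
        self.submaps[submap.submap_id] = submap

    def update_local_map(self, robot_xy: tuple[float, float]) -> list[int]:
        """Mark nearby submaps as loaded, unload the rest, return loaded ids."""
        for submap in self.submaps.values():
            distance = math.dist(submap.anchor, robot_xy)
            submap.loaded = distance <= self.load_radius
        return sorted(s.submap_id for s in self.submaps.values() if s.loaded)

    def find_objects(self, label: str) -> list[tuple[int, str, MapObject]]:
        """Search the sparse hierarchy; works for loaded and unloaded submaps."""
        return [
            (submap.submap_id, region.name, obj)
            for submap in self.submaps.values()
            for region in submap.regions
            for obj in region.objects
            if obj.label == label
        ]
```

The sparse hierarchy stays queryable for every submap, so a discrete planner could search it regardless of which submaps are loaded, while only the local map would carry dense geometry for trajectory generation.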

BibTeX

@misc{ong2025atlasnavigatoractivetaskdriven,
  title={ATLAS Navigator: Active Task-driven LAnguage-embedded Gaussian Splatting},
  author={Dexter Ong and Yuezhan Tao and Varun Murali and Igor Spasojevic and Vijay Kumar and Pratik Chaudhari},
  year={2025},
  eprint={2502.20386},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2502.20386},
}